Organic search is a channel nearly every brand relies on, but it’s often the least understood. This is a shame because organic search can bring in some of the highest revenue and LTV of any marketing channel.

Broadly, if a link is part of the results displayed by a search engine and not an ad, it’s “organic.” That includes website, image, video, and local results in Google, and the definition goes beyond webpage results. YouTube, Amazon, Etsy, and eBay can all fall under the umbrella of organic search.

The source of obscurity is the secretive nature of search engines. They don’t want us to know exactly how their ranking process works because that would make them vulnerable to spam and manipulation.

The other part that makes organic search difficult to understand is that it’s technical. Other marketing channels have a lot of the underlying technology abstracted away with tools and automation, but search engine optimizers often have to interact with web infrastructure directly.

With this guide, we’ll demystify aspects of organic search, define the elements of SEO, and give actionable advice for executing an SEO strategy in your organization.

What is SEO? What Do SEOs Do?

Why Is Organic Search Important?

How Search Engines Work

The Ranking Factors Search Engines Look For

Top 9 Studies About Ranking Factors

How SEO is Tied to the Marketing Stack

A Framework for a Goal-First SEO Strategy

Search Engine Optimization is a Practice

What is SEO? What Do SEOs Do?

Search Engine Optimization (SEO) is the practice of earning qualified traffic from the organic search channel by achieving more visibility on search engines. We call the people who practice this work SEOs.

To get that improved visibility, SEOs interact with each part of the marketing stack (we’ll get to what that is in a bit) to improve rankings and user experience, and to convert users.

A good SEO will follow search engine news, read studies about organic performance, and read about patents to develop a theory of what works well for ranking. Then they will take the brand’s business goals and develop a strategy to reduce their website’s distance from perfect. Finally, they will measure performance as the tactics in the strategy are implemented and adjust course as needed.

Why Is Organic Search Important?

Search engines are a major source of referral traffic for brands, with Google Search the foremost, contributing 66% of web traffic referrals from the top 250 sites. That’s an awful lot of traffic coming from Google, and we can’t afford to ignore it. It’s also why we tend to talk about Google Search more than any of the other search engines.

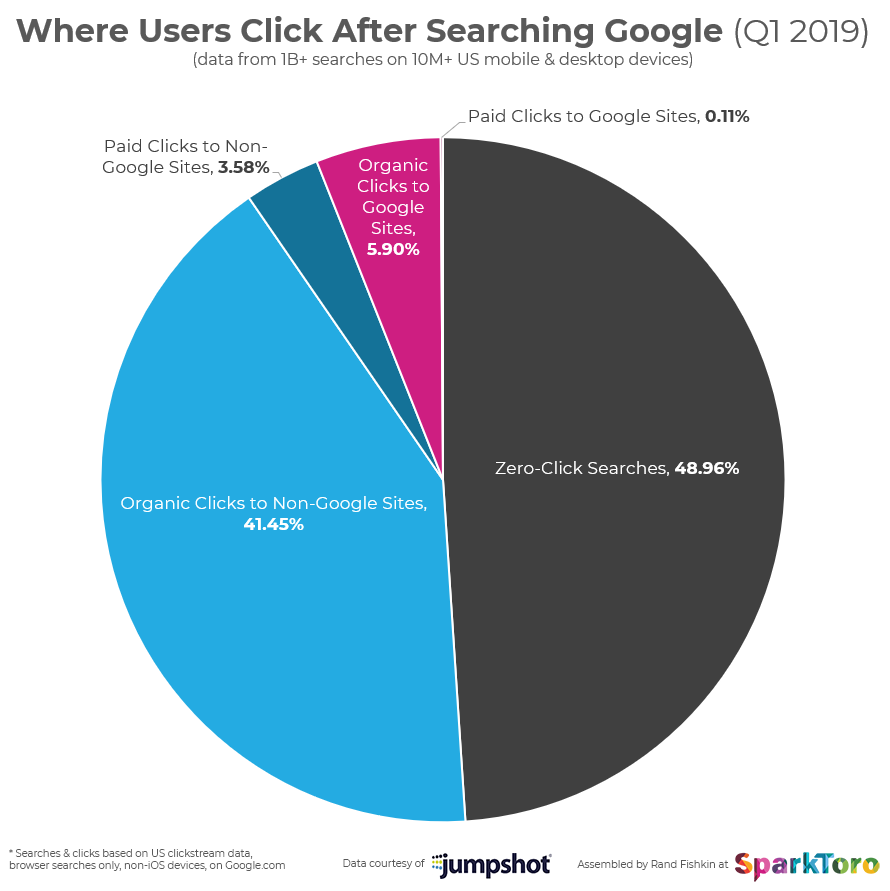

Most brands don’t ignore Google Search. They actually invest quite a bit in Google Ads, which totaled $134.81 billion in revenue in 2019. This is an impressive haul for the internet titan, but paid clicks aren’t the majority of traffic coming from Google Search.

According to Jumpshot and Sparktoro, there are over ten times as many organic clicks as paid clicks coming from Google Search.

With 66% of clicks from the top 250 referrers, and 41% of those clicks being organic, the conclusion is clear. We can’t ignore Google Search, and web search in general, if we want to reach our addressable market online.

How Search Engines Work

Google once gave a simple classification for what users came to their search engine for: Do-Know-Go. In the course of our everyday lives, information needs will arise.

Whether it’s finding a deal on an electric kettle, planning a new bus route to work, or just idle curiosity, we take to the internet to resolve the information prerequisite of some future action. Google wants to service this niche. They even included it in their mission statement: “to organize the world’s information and make it universally accessible and useful.”

While Google has an ambitious mission, every search engine performs more or less the same task: find everything that someone might want, and sort it all by desirability.

Crawling

It’s called the “web” because the documents on it link to each other, forming a structure that resembles an abstraction of a spider’s web. Continuing the metaphor, software that discovers web pages by traversing the web is called a spider.

For the most part, this is how search engines discover new pages: following each link they find to see where it leads. There are other methods of URL discovery, and XML sitemaps are the most important of them because they give search engines a list of URLs that we definitely want them to crawl.
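As a rough illustration of link-following discovery, here is a minimal sketch in Python. The three linked pages, the orphan page, and the `crawl` helper are all hypothetical, and the "web" is an in-memory dictionary so the example runs offline. A real crawler would also need politeness rules, robots.txt handling, and error handling.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


# Hypothetical site held in memory; nothing links to /orphan.
PAGES = {
    "https://example.com/": '<a href="/kettles">Kettles</a>',
    "https://example.com/kettles": '<a href="/">Home</a> <a href="/kettles/electric">Electric</a>',
    "https://example.com/kettles/electric": "<p>No outgoing links.</p>",
    "https://example.com/orphan": "<p>Nothing links here.</p>",
}


def crawl(seed_urls, fetch):
    """Breadth-first discovery: start at the seeds and follow every link found."""
    discovered = []
    seen = set(seed_urls)
    queue = deque(seed_urls)
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched
            continue
        discovered.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.hrefs:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return discovered


# Following links alone never reaches the orphan page.
by_links = crawl(["https://example.com/"], PAGES.get)
# Seeding the crawl with a sitemap-style URL list does reach it.
with_sitemap = crawl(["https://example.com/", "https://example.com/orphan"], PAGES.get)
```

The orphan page shows why a URL list matters: a page that nothing links to is invisible to pure link-following, but it is found as soon as it appears among the seeds.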

A fundamental part of SEO is making sure that search engines receive our content correctly from a server. Typically we facilitate this with a list of our URLs in our sitemap, and making sure each URL is discoverable through our linking structure.
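For reference, an XML sitemap is just a list of `<url>` entries, each with a `<loc>`, inside a `<urlset>` element in the sitemaps.org namespace. The sketch below builds and reads back a minimal one with Python's standard library. The URLs and the helper names `build_sitemap` and `read_sitemap` are made up for illustration, and optional fields such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def read_sitemap(xml_text):
    """Extract every <loc> value, roughly as a crawler would when reading the file."""
    root = ET.fromstring(xml_text)
    return [loc.text for loc in root.iter(f"{{{SITEMAP_NS}}}loc")]


# Hypothetical URLs we definitely want crawled.
urls = [
    "https://example.com/",
    "https://example.com/kettles",
    "https://example.com/kettles/electric",
]
sitemap_xml = build_sitemap(urls)
round_trip = read_sitemap(sitemap_xml)
```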

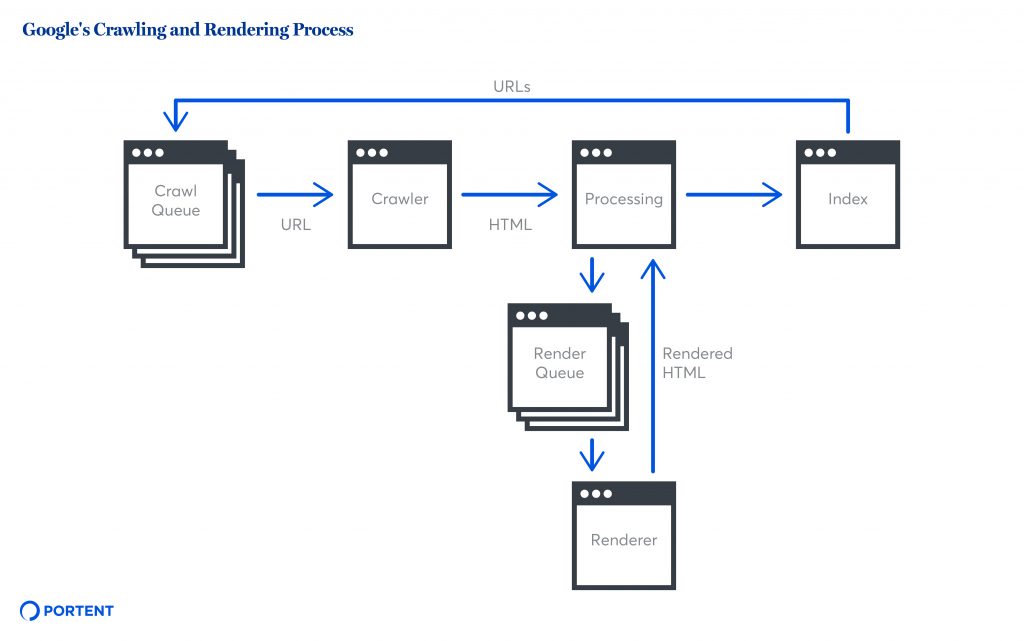

Rendering

Basic search engines (like Yahoo and Yandex) will only view our content as a series of characters with references to images and other pages embedded in it. That perception doesn’t match well with what a person will see in their browser, so advanced search engines (like Google and Bing) will use crawlers that construct pages using HTML, CSS, and JavaScript like an ordinary browser would.

This process of constructing a page in a browser is called “rendering.” Advanced web search engines like Google will render the pages they find to see which content is actually visible to people and not just in the HTML source. When Google says they use “mobile-first indexing,” they mean they only consider the content that renders on a mobile browser.

Determining how our content renders for Google and other search engine crawlers is critical. If they see a broken page structure or just a blank page, our rankings will suffer accordingly. With the Core Web Vitals becoming part of the ranking algorithm, we need to make sure our pages render quickly and smoothly, with no extra complications from JavaScript.

Indexing

An index is a data structure that provides an easy way to retrieve information stored in a database. After a search engine crawls and renders a page, it extracts the relevant data and stores it in an indexed database. Once a page is indexed, it is eligible to appear in search results.

Basic search engines use a variation called an inverted index, where instead of storing all of the words a page contains, they store a list of pages that contain a word. The index at the end of a book is structured like an inverted index for topics and pages. This is an efficient way to develop a set of search results, because instead of checking every page in the database for the presence of a word in a query, they just combine the page lists for each word in the query.

It works a lot like the index at the back of a book.
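To make the idea concrete, here is a small sketch of an inverted index in Python. The three "pages" are invented, and the sketch assumes that "combining" the page lists means intersecting them (every query word must appear on the page). Real engines also handle stemming, synonyms, and much more.

```python
from collections import defaultdict

# Hypothetical pages, keyed by a page ID.
DOCS = {
    1: "electric water kettle with fast boil",
    2: "stovetop kettle for tea",
    3: "electric water heater",
}


def build_inverted_index(docs):
    """Map each word to the set of page IDs that contain it."""
    index = defaultdict(set)
    for page_id, text in docs.items():
        for word in text.lower().split():
            index[word].add(page_id)
    return index


def search(index, query):
    """Return the pages that contain every word in the query."""
    page_lists = [index.get(word, set()) for word in query.lower().split()]
    if not page_lists:
        return set()
    # Assumption: "combine" means intersect the per-word page lists.
    return set.intersection(*page_lists)


index = build_inverted_index(DOCS)
kettle_pages = search(index, "electric water kettle")
electric_water_pages = search(index, "electric water")
```

Note that `search` never scans the page text. It only looks up one page list per query word, which is the efficiency gain described above.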

Modern search engines have more than just inverted indexes for words. They store data about images, videos, and even entities in the form of a knowledge graph. Recently, Google announced a new index for passages of text that might help answer a question.

Ranking

Determining which pages to display in search results is about narrowing down the candidate set of pages to what might be relevant, then sorting them by quality and context.

In the past, a simple search engine might generate search results for a query for “electric water kettle” by performing these steps:

- Do keyword matching by combining the list of pages in the inverted index records for “electric,” “water,” and “kettle.”

- Narrow the list down to the pages that match the user’s language.

- Sort the list of pages using a weighted combination of a backlink authority score, a keyword presence score, and a user experience score.

- Take the first 100 pages in the sort and display them to the user.
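The steps above can be sketched in a few lines of Python. Everything here is illustrative: the `Page` fields, the weights, and the scores are made-up assumptions, and the keyword matching is done with a simple set comparison rather than a real inverted index.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    language: str
    words: frozenset
    backlink_score: float  # all three scores assumed to be in the range 0..1
    keyword_score: float
    ux_score: float


# Hypothetical weights; real engines do not publish theirs.
WEIGHTS = {"backlink": 0.5, "keyword": 0.3, "ux": 0.2}


def score(page):
    """Weighted combination of the three per-page scores."""
    return (
        WEIGHTS["backlink"] * page.backlink_score
        + WEIGHTS["keyword"] * page.keyword_score
        + WEIGHTS["ux"] * page.ux_score
    )


def rank(pages, query, user_language, limit=100):
    terms = set(query.lower().split())
    # Step 1: keyword matching (every query word must be on the page).
    candidates = [p for p in pages if terms <= p.words]
    # Step 2: keep only pages in the user's language.
    candidates = [p for p in candidates if p.language == user_language]
    # Step 3: sort by the weighted score, best first.
    candidates.sort(key=score, reverse=True)
    # Step 4: take the first `limit` results.
    return candidates[:limit]


kettle = frozenset({"electric", "water", "kettle"})
PAGES = [
    Page("https://a.example/kettle-review", "en", kettle | {"review"}, 0.9, 0.6, 0.7),
    Page("https://b.example/kettles", "en", kettle, 0.4, 0.9, 0.8),
    Page("https://c.example/wasserkocher", "de", kettle, 1.0, 1.0, 1.0),
    Page("https://d.example/heaters", "en", frozenset({"electric", "heater"}), 0.8, 0.8, 0.8),
]

results = [p.url for p in rank(PAGES, "electric water kettle", "en")]
```

In this toy data, the German page is filtered out despite its perfect scores, and the heater page never becomes a candidate because it doesn't contain every query word.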

Now, search engines take a lot of factors into consideration when ranking results. For example, they try to determine the intent behind the keyword instead of just the words used, and they try to determine if the candidate pages are trustworthy for queries related to health or finance.

The Ranking Factors Search Engines Look For

Google has said they use over 200 ranking factors for generating their search results, but what is a ranking factor? Other parts of Google’s processes are often mistaken for factors, but the definition is simple. Ranking factors are the features of a document that Google uses to narrow and sort a set of search results to best answer a query.

Things like user intent, query interpretation, user device, user location, Google Ads, Google Analytics, and anything related to Google’s infrastructure are not ranking factors.

Ranking factors are all about the pages Google might send users to and what they know about them. There’s a lot to know too, because pages can be judged by the company they keep.

Which brand or individual wrote the page? What is their reputation? What other websites is the page linking out to? What are their reputations? Since almost every document is part of a web of other documents, the connections between them can be analyzed to determine features pertinent to ranking search results.

What is E-A-T and Why is it Important?

Google has a quality assurance process for its search engine to make sure new features and updates are producing useful results. The people who review Google’s search results are called Search Quality Raters, and it’s a program Google has relied on since at least 2005. Google made the full handbook they give to Quality Raters public a few years ago, and in 2015 there was a major update to the way they talked about their goals for search result quality.

Google introduced a concept called E-A-T, which is short for Expertise-Authoritativeness-Trustworthiness. It’s not a new ranking factor or significant shift in how they rank websites. It’s a concise description of their ranking goals for people who aren’t as familiar with Google search as an organic search marketer is.

“Do-Know-Go” is an earlier pithy phrase that emerged from Google’s Search Quality Rater Guidelines, used to classify what users want from a search. Like that predecessor, E-A-T is a fairly handy shorthand, in this case for classifying essential ranking factors.

E-A-T is also a signal that Google’s ranking factors are becoming sophisticated in a way that we can’t fake, exploit, or take shortcuts around. Placing more keywords in header tags won’t improve our rankings if we aren’t also providing accurate, trustworthy, and beneficial information to the end users.

Expertise

Expertise isn’t a new way to measure the quality of content. It is the distillation of what makes content useful. For years we’ve been directed to make sure our content is high-quality, but there hasn’t been a concise way to encapsulate how to think about producing in-depth, factual, applicable, and readable content.

Content written by experts is more likely to have all the features that will create a positive user search experience. Recognizing this, Google’s goal with content quality ranking factors is to favor content that reads as if an expert wrote it, whether or not an expert was actually involved.

So when we want to optimize for whatever content quality algorithms might be in Google search, we need to aim for content that clearly and directly addresses the user’s search intent, uses verifiable facts as the basis of the answer, and provides depth in the topics used to deliver a satisfying answer.

Expertise also isn’t just about individual pages. It’s about the pages our content links to and is linked from. Since the web is a network of documents, each page on our website needs to be high quality, because users are likely to navigate to any of our other pages. Google recognizes this and doesn’t want to send users to pages that link to low-quality pages.

Authoritativeness

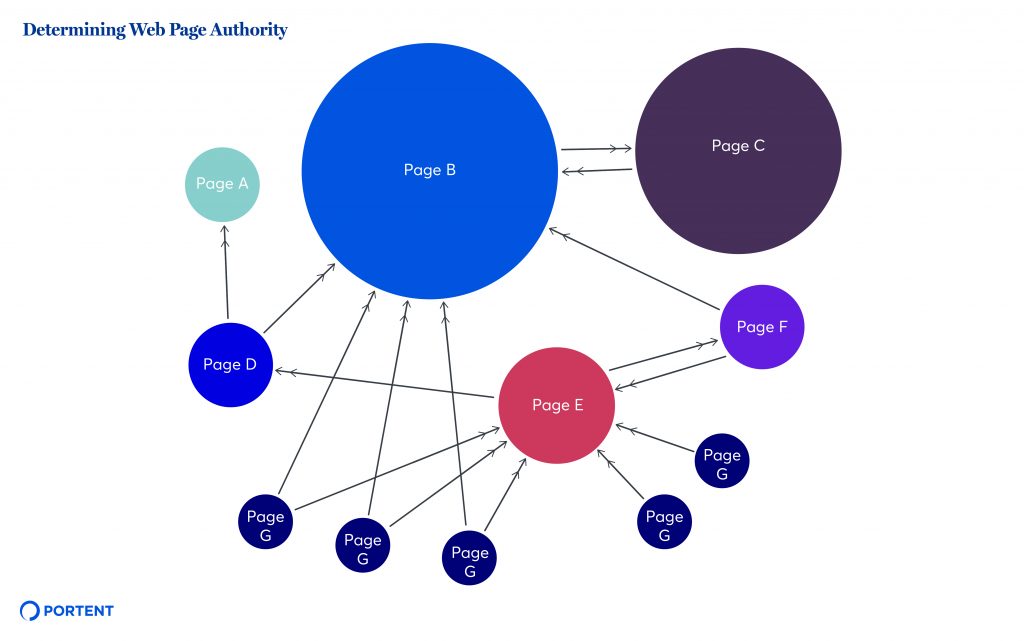

The concept of web page authority isn’t new for Google. It goes back to their attempt to model it with PageRank. One of the ways of determining whether a brand is an authority on a topic is by looking at whether other people say it is. Ranking factors that are used in determining authority are based on detecting backlinks, mentions, and brand searches.

Authority isn’t simple popularity though. This video by Matt Cutts goes into how Google approaches the problem of separating authority from popularity. In summary, the difference is that authority is not about where people go the most, but about where they link and how.

Cutts also goes on to explain how authorities in one topic are not necessarily authorities in every topic. The way Google tries to determine who is relevant for which topic is by the context of the link or mention.
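To give a feel for how links can be turned into an authority score, here is a simplified, textbook-style version of PageRank in Python. This is only a sketch of the general idea, not Google's production system. The site names, the link graph, and the damping value of 0.85 are assumptions for illustration, and the sketch ignores topic and link context entirely.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by repeated redistribution of scores along links.

    `links` maps each page to the list of pages it links out to.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share on each pass.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical link graph: three sites all link to "authority-site".
LINKS = {
    "blog-a": ["authority-site"],
    "blog-b": ["authority-site"],
    "forum": ["authority-site", "blog-a"],
    "authority-site": [],
}

scores = pagerank(LINKS)
top_page = max(scores, key=scores.get)
```

In this toy graph the page that everyone links to ends up with the highest score, regardless of how much traffic any page receives, which mirrors the distinction between authority and popularity described above.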

Trustworthiness

Who is more likely to give accurate information for the query “can dogs eat garlic?” The American Kennel Club or TacomaDogGuy1982 on Reddit? It’s pretty important: all of the alliums are toxic to dogs, including onions and shallots, so the answer might be life or death for a user’s pet.

Figuring out who is trustworthy enough for queries that will affect users’ health or wealth is a relatively new focus area for Google. The ways Google determines trust aren’t exactly known, but there are likely avenues. Reviews, positive mentions, having verifiable facts in content, links from newspapers and magazines, and links from other trusted websites are plausible external signals.

Internal factors contribute to trustworthiness too. Missing information about who operates the website, a missing privacy policy, lots of grammatical errors, plagiarized content, security problems, and machine-generated content are some of the features that correlate with untrustworthy websites.

Top 9 Studies About Ranking Factors

Google has claimed for years that its ranking algorithm relies on more than 200 secret ranking signals, but which ones matter the most? This is a tough question to answer because it depends on the query: some ranking factors are more important to users for some queries than for others.

So which factors are important in general? People have attempted to answer this question over the years, and often the best we can do are correlation studies.

All a correlation study attempts to do is find strong connections between website features and ranking position. An important thing to consider is that correlation is not causation, i.e. just because two phenomena occur together frequently doesn’t necessarily mean one is caused by the other. It’s possible there is a third factor happening that causes both, or the phenomena reinforce each other.

For example, the number of backlinks pointing to a page is correlated well with high rankings. We usually take it as a given that backlinks cause high rankings, but a page might rank well because the domain belongs to a popular brand and the content is very relevant to the search query. In fact, pages that rank in the top 10 search results receive more links because they are so visible. When people look for a source of a claim or an example of something, the first place they go is often Google search. This virtuous cycle could explain why these pages tend to rank.
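As a sketch of what a correlation study computes, the Python below calculates a Spearman rank correlation between ranking position and backlink count for ten results. The numbers are invented for illustration, and Spearman is only one reasonable choice of statistic. A real study would cover many thousands of queries.

```python
from math import sqrt


def ranks(values):
    """Rank values from 1 (smallest) upward; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def spearman(xs, ys):
    """Spearman correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Made-up data: search result position and backlinks for each ranking page.
positions = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
backlinks = [950, 400, 620, 310, 280, 150, 200, 90, 60, 75]

rho = spearman(positions, backlinks)
```

With this data `rho` is strongly negative, meaning pages with more backlinks tend to sit at lower position numbers (closer to the top). The number says nothing about which way the causation runs, which is exactly the caution raised above.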

Linked below are nine correlation studies that we think do a good job of showing connections between likely ranking factors and rankings. However, we should be critical while interpreting them, not only because causation is hard to prove, but also because these features are overrepresented: they are the easier ones to measure.

- SEO Ranking Factors & Correlation, by Moz

- What Do Correlation Metrics Really Tell Us About Search Rankings, by Sparktoro

- Ranking Factors 2.0, by SEMrush

- Ranking Factor Studies, by Botify

- Ranking Factors, by Search Metrics

- Do Social Signals Influence SEO, by Cognitive SEO

- Do Links From Pages With Traffic Help You Rank Higher, by Ahrefs

- YouTube SEO Ranking Factor Study, by Briggsby

- Search Engine Rankings Study, by Backlinko

How SEO is Tied to the Marketing Stack

It’s not enough to talk about ranking factors and what search engines are trying to do. We need to make changes to our websites and drive qualified users to conversion. The way we model the organic search channel at Portent is through the Marketing Stack.

The Marketing Stack is a concise way of explaining the material basis of digital marketing by dividing up the complex parts into areas of concern, and finally showing the dependencies between them. For organic search (an earned media channel), we rely heavily on the foundation of content, analytics, and infrastructure but we also rely on owned media.

Content

We’ve heard Ian Lurie say a few times in the past, “marketing without content is just yelling.” And in some ways organic search without content is just yelling and waving your arms at Google headquarters.

There is a type of magical thinking still seen on the web where content serves SEO as just a place to dump keywords into text, as if no user cares how well the text addresses their query and they just want a page that gives them a big orange button to click. Nothing could be further from the truth.

Content is what separates the modern organic search marketer from the keyword and link spamming ripoff artist of the past. Standing out in the search results (and the job and agency markets) depends on determining what users want to know and delivering it to them. If we help solve the user’s problem, describe our offer in clear terms, and present it all in a design that’s easy to navigate, we are going to outperform anyone else on the SERP who doesn’t grasp this.

The oldest ranking factors like title tags, H1 tags, and anchor text are based on helping the user understand the website and telling them what they will find. These are only ranking factors because they help users, not because search engines decided that these are the best places to put keywords.

Analytics

Determining what success looks like and measuring it is the primary concern of Analytics. For organic search, we have to consider keyword rankings and share of voice as pre-click metrics in addition to traditional post-click metrics like conversion rate and bounce rate.

Since search engines aren’t as forthcoming for organic search as they are for paid search, organic search marketers have to rely on third-party tools to get an idea of overall and competitor performance. Even though Google Search Console will report on clicks, impressions, and rankings for keywords and URLs, the numbers are sampled and filtered in a way that makes them not totally reliable.

Getting an accurate and comprehensive picture of the organic landscape means tracking rankings for many keywords, and not just for your website but for your competitors’ websites too. At Portent, we use STAT for rank tracking because it provides a very handy visibility metric called share of voice. It’s a summary metric that incorporates the estimated click-through rate and search volume of tracked keywords into a percentage to see which websites are likely receiving the most clicks.
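Here is one way a share-of-voice style metric could be calculated, sketched in Python. This is not STAT's actual formula, which isn't described here. The click-through rates by position, the keywords, the search volumes, and the site names are all hypothetical, and the sketch assumes share of voice is each site's portion of the total estimated clicks across tracked sites.

```python
# Hypothetical click-through rate for each ranking position.
ESTIMATED_CTR = {1: 0.30, 2: 0.15, 3: 0.10}

# Hypothetical monthly search volume for each tracked keyword.
VOLUMES = {"electric kettle": 1000, "tea kettle": 500}

# Hypothetical rankings: (site, keyword) -> position.
RANKINGS = {
    ("ours", "electric kettle"): 1,
    ("ours", "tea kettle"): 3,
    ("rival", "electric kettle"): 2,
    ("rival", "tea kettle"): 1,
}


def share_of_voice(rankings, volumes, ctr_by_position):
    """Return each site's percentage of total estimated clicks."""
    clicks = {}
    for (site, keyword), position in rankings.items():
        # Positions without a known CTR are treated as earning no clicks.
        ctr = ctr_by_position.get(position, 0.0)
        clicks[site] = clicks.get(site, 0.0) + ctr * volumes[keyword]
    total = sum(clicks.values())
    if total == 0:
        return {site: 0.0 for site in clicks}
    return {site: 100.0 * site_clicks / total for site, site_clicks in clicks.items()}


sov = share_of_voice(RANKINGS, VOLUMES, ESTIMATED_CTR)
```

In this toy data, "ours" comes out ahead even though "rival" holds a first-place ranking, because our first-place ranking is on the higher-volume keyword. That is the advantage of a summary metric over looking at individual rankings.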

Analytics for SEO is not just about performance; it’s also about diagnostics. Auditing a website is not just about providing a spreadsheet of error reports from a crawling tool. Website audits should be progressively detailed and contain recommendations for resolving the errors in those spreadsheets.

Infrastructure

The classic technical SEO concerns of crawling and indexing live in Infrastructure. Naturally, if a search engine can’t see or interpret our content, how can we hope to rank anywhere? If an SEO doesn’t understand the connection and transmission from a server to a browser, how can they ever resolve a crawling or indexing issue?

Understanding the technology of the web is vital to the organic search marketer, not just for what search engines perceive but for what users experience as a page loads. This is why SEO has always been one of the most technical marketing channels.

Few other digital marketers need a general understanding of how servers and web browsers work. With the advent of JavaScript rendering, structured data markup, and Core Web Vitals, JavaScript joins HTML and CSS as a must-know language for organic search marketers. This must be why site performance is typically thought of as a responsibility for SEOs.

However, more digital marketers should have a technical understanding of web technologies. One example of this is site speed. It’s not just a ranking factor, it’s an everything factor. Every channel is impacted by how quickly a page loads and that translates into worse conversion rates for slower pages, because not every user will wait around for a slow page to load.

A Framework for a Goal-First SEO Strategy

Now that we’ve covered why organic search is essential, what search engines want to rank, and the environment we work in, it’s time to wrap it up with how to take action.

Here’s an outline of how we plan our SEO work at Portent. You can use this as a starting point; it maps pretty well to whatever agile-like project management scheme your organization might be using:

- Determine goals. Typically, the goal of a website will be to get customer signups or collect leads. This isn’t always the case, so it’s important to define exactly what you want your users to do on the website if the point of sale is not the website.

- Decide on KPIs. What does success look like, and how are you going to measure it? YoY gains or numerical targets are the most common. These will go into reporting later.

- Perform keyword research. Find sets of keywords that are both opportunities for growth and that are being used by the people likely to complete website goals. Map those keywords to target landing pages.

- Audit the website. Audit either the whole website, or just the relevant parts. Some websites are too large to be audited entirely in one go. The objective here is to find improvements that can be made on your website that will improve keyword rankings or otherwise contribute to website goals.

- Organize improvements into projects. Group the improvements in the audit into thematic blocks of achievable scope. Rewriting all the meta tags of a large site may not be feasible, but dividing the tags by site section might be.

- Prioritize projects. Estimate the gains in traffic and conversions of each project so you can work on what will make the biggest impact first. Are there any projects that rely on others to be completed first?

- Divide the project into tasks. Identify who needs to complete each task, estimate hours to complete them, figure out resources and approvals you are going to need, and write briefs for the tasks that need to be delegated out.

- Schedule tasks 30, 60, and 90 days in advance. See what you can fit into the next calendar or fiscal quarter, and everything else should be assigned to future quarters. Do another 30-60-90 planning session each month.

- Document the quarterly plan. Write it up to the general standard of the organization. Usually this is a roadmap spreadsheet.

- Do monthly and quarterly reporting. Review KPIs and crawl your website each month to monitor progress to goals and find any new website issues. Do a quarterly business review presentation for stakeholders to go over high-level progress to goals.

Search Engine Optimization is a Practice

SEO isn’t a role or a job, or even a growth-hack trick to get more traffic. It’s a practical application of theory to websites. SEO isn’t limited to marketers either. Product managers, developers, and generalist marketing managers can do it too. Anyone who is improving the way search engines and search engine users understand a website is doing SEO.

Hopefully, with this guide, you will be able to bring the practice to your organization and take advantage of the profitable traffic it can bring in.

If you’d like to learn more, we’re always adding SEO-related content to our blog. And, we also offer SEO services for companies big and small. If you’re interested in working with Portent, reach out!