Your A/B testing strategy is only as strong as the ideas you are testing. A strong A/B test idea combines business impact with an improvement to the user experience. Without both, your A/B test idea is either destined to produce mediocre results or based on exploiting a dark pattern.

So how do you consistently develop ideas that deliver for both users and the people who sign your checks? The best way to generate highly impactful A/B test ideas is to conduct qualitative user research to inform your testing strategy.

You may be thinking, “I don’t know. I think we come up with some pretty good CRO testing ideas all on our own.”

Consider this: users are responsible for every single conversion on your site. Without understanding what the user needs, their behaviors, or how they view your site, you’ll never be able to truly optimize the experience of achieving a conversion goal. You simply can’t get by on your intuition and chutzpah.

Let’s examine some common methods of generating A/B test ideas to better understand where they succeed in the A/B test ideation process and where they fall short.

How Are You Coming Up With Your A/B Test Ideas?

Heuristic Assessment

A/B testing ideas often come from heuristic evaluations. A CRO professional examines a page and compares it against a set of UX design principles. While this method may generate a large number of ideas quickly, the strength of those ideas varies greatly based on the expertise of the CRO professional. Heuristic evaluations are best for identifying glaring optimization opportunities, but should not be relied on for developing your A/B testing strategy.

Competitive Analysis

CRO practitioners often look to competitors for A/B testing ideas. Understanding the competitive landscape is important, but there is no way to tell if those designs actually help your competitors achieve their conversion goals. Your A/B testing strategy needs to be based on your users and your unique opportunities. Competitive analysis works best for inspiring variations to fix known problems rather than generating new UX optimization ideas.

Brainstorming Sessions

Brainstorming sessions can be useful for introducing a fresh set of eyes in the ideation process. These meetings bring stakeholders from different departments together to explore opportunities to improve a page. While this method brings a more diverse perspective, it doesn’t mean you’re capturing the insights of your user base. Additionally, stakeholders are often too close to a product to accurately diagnose UX design opportunities. This method is most effective for generating a set of elements and interactions to investigate further.

Analytics Assessment

To improve the quality of ideas generated by any of these methods, CRO practitioners often perform an analytics assessment. Google Analytics and other web analytics platforms provide excellent insight into performance metrics and highlight experiences with lower-than-expected conversion rates. This quantitative data is often paired with more user-focused tools to uncover behavioral context.

Tools like Hotjar and FullStory show you heat maps and other visualizations of user activity on your site, and they let you watch recordings of individual sessions. While this can uncover general user behavior patterns, it won’t tell you why those patterns occur. Even when paired with on-site visualizations, an analytics assessment will only tell you what users are doing, not the problems they face or how to address them. Use these assessments as a jumping-off point for additional research and to validate assumptions about user behavior trends.

Where These Methods Fall Short

The issue with relying on these methods alone is that none of them tell you why users do or do not convert. These ideation techniques are great guideposts to point you in the right direction, but they can’t bring you straight to the answer. That’s where qualitative user research comes in.

Qualitative UX research is a GPS controlled by your users and designed to bring you to the reasons behind their actions. It is the only way to understand why users behave the way that they do.

Now that you’re warming up to the idea of conducting user research, let’s talk about the specific impact this research can have on your A/B testing strategy.

The Impact of Qualitative UX Research

At Portent, we’ve seen the value of qualitative user research firsthand. Our program has found that A/B tests based on user experience research produce a winning variation 80.4% of the time. Compared to our overall average test win rate of 60%, that’s a 34% relative lift.
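The 34% figure is a relative lift: it compares the research-based win rate with the overall average as a ratio rather than as a simple difference.

```latex
\text{relative lift} = \frac{80.4\% - 60\%}{60\%} = \frac{20.4}{60} = 0.34 = 34\%
```

In absolute terms, the gap between the two win rates is 20.4 percentage points.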

If that’s not exciting enough, we’ve found that test ideas based on user research can have a larger impact on client KPIs than traditionally sourced ideas.

We conducted a series of user experience tests on a streaming service client’s site to understand how users browsed through their content before signing up for a free trial. One important finding was that many users didn’t realize that they could preview content before signing up. This inspired our team to investigate solutions for this lack of awareness.

We ran an A/B/n test (an A/B test with more than one variation) on the landing page, adding a CTA in the hero region that invited users to browse and preview videos. Every variation in this test beat the original for both trial signups and video previews watched. One variation increased video previews by 89.87% and total trial signups by 9.89%. You can see the original CTA and the winning variation below:

This test would never have happened if we didn’t conduct the qualitative user research that inspired it. That same study generated more than 15 other impactful A/B test ideas, and the findings have sparked conversations in cross-functional teams to strengthen earlier marketing funnel activities. Not only does user research improve your CRO testing efforts, but it can also affect your entire digital marketing strategy!

So, now that you’re completely sold, let’s look at how organizations and teams of any size can get started with qualitative user experience research.

Qualitative UX Research Methods

On-Site Surveys

The easiest entry point to user research for any A/B testing program is the on-site survey. Analytics tools used for screen recordings and heat maps can often inject short surveys directly into your website. These surveys appear to the users you target and can either ask specific questions about the user experience or collect large-scale, single-question responses such as Net Promoter Score.
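Net Promoter Score is conventionally calculated from a 0-10 "How likely are you to recommend us?" question: the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 through 6). Here is a minimal Python sketch, assuming you have exported the raw scores from your survey tool as a list of integers. Export formats vary by tool, and the responses below are invented for illustration.

```python
def net_promoter_score(scores: list[int]) -> float:
    """Return NPS (-100 to 100) from 0-10 likelihood-to-recommend ratings."""
    if not scores:
        raise ValueError("need at least one response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # scores of 9-10
    detractors = sum(1 for s in scores if s <= 6)  # scores of 0-6; 7-8 are passives
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical responses exported from an on-site survey.
responses = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
print(net_promoter_score(responses))  # 20.0
```

Passives (7 or 8) count toward the total number of responses but toward neither group, so the score ranges from -100 (all detractors) to 100 (all promoters).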

An on-site survey can quickly indicate whether your assumptions about user expectations are accurate. This insight can save you from wasted effort spent on incorrect guesses.

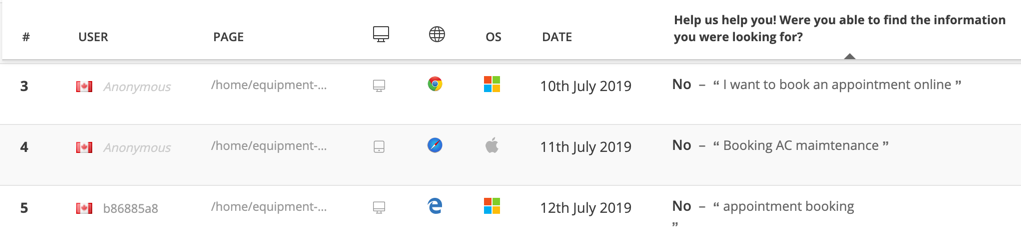

In the on-site survey below, we wanted to confirm whether a phone call button on a large HVAC client’s site was attracting users interested in purchasing a product. We found that most users clicked this button with billing- and payment-related questions. This allowed us to pivot our plans and focus on other ways of funneling purchase-focused users to contact information.

On-site surveys can also collect broader buckets of general feedback, which is fantastic for sparking completely new A/B testing ideas. You can run these surveys over long periods of time to capture qualitative user experience data that isn’t tied to a specific element. We often deploy surveys that simply ask, “Were you able to find everything you need?” The one below ran on that same HVAC client’s site, and the feedback we received informed both our A/B testing strategy and our content strategy, helping us design clear paths for appointment booking.

We’ve found that the most successful on-site surveys ask simple questions that don’t require much effort to complete. Refrain from complex, open-ended questions and long lists of multiple-choice options.

Most users will only respond if the survey doesn’t interrupt what they are trying to accomplish. With this in mind, consider carefully how these surveys will be deployed on your site.

With most free tools, you can set a survey to deploy after the user has scrolled down half of the page length or has been on the page for a set amount of time. Advanced and paid tools like Hotjar and Mouseflow allow you to set surveys to deploy after users complete specific actions. The options will vary based on the tool, but always make sure to consider which conversion behaviors may be impacted when choosing where and how to launch an on-site survey.
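The trigger options above amount to a simple decision rule. The Python sketch below is a hypothetical illustration of that rule, not any survey tool’s API. `PageState`, `should_show_survey`, the 30-second default, and the choice to never show a survey during a conversion flow are all assumptions made for this example. Tools like Hotjar and Mouseflow configure triggers through their own settings.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class PageState:
    scroll_depth: float          # fraction of the page scrolled, 0.0-1.0
    seconds_on_page: float
    in_conversion_flow: bool     # hypothetical flag, e.g. the user is mid-checkout
    completed_actions: Set[str] = field(default_factory=set)


def should_show_survey(state: PageState,
                       min_scroll: float = 0.5,
                       min_seconds: float = 30.0,
                       trigger_action: Optional[str] = None) -> bool:
    """Decide whether to display an on-site survey for the current visit."""
    # Assumed rule: never interrupt a user who is in the middle of converting.
    if state.in_conversion_flow:
        return False
    # Action-based trigger, the kind some paid tools offer.
    if trigger_action is not None:
        return trigger_action in state.completed_actions
    # Common free-tool triggers: half the page scrolled or a set time on page.
    return state.scroll_depth >= min_scroll or state.seconds_on_page >= min_seconds


reader = PageState(scroll_depth=0.6, seconds_on_page=12, in_conversion_flow=False)
print(should_show_survey(reader))  # True: scrolled past half the page
```

Checking the conversion flow first is the design choice that matters most here: whatever trigger you use, the survey should not compete with the behavior you are trying to optimize.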

Competitive Analysis and Design Preference Surveys

For this blended qualitative and quantitative user research method, you should start with a very specific element you want to investigate. Perhaps a heuristic assessment indicated that your product cards need optimization. In this case, you would perform a competitive analysis focused on the product cards of your competition.

In analyzing their designs, list out the different pieces of information, types of interactions, and user interface elements that are used. Keep track of every competitor site that uses each of these features. Organizing this information into a spreadsheet will give you a holistic view of how often a feature occurs and where your site experience may be falling short. You can see our competitive analysis approach applied to a national tire retailer here:

The next step is to create a new version of your site’s design for this element. This design should incorporate any missing common features that your competitive analysis identified. Use the best competitor versions of each feature for inspiration, but keep your own site styles and patterns in mind when creating your variation. The new design will be used in the final phase of this user research project, the design preference survey.
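The spreadsheet step is essentially a frequency count. Here is a minimal Python sketch of it, assuming you record the features observed on each competitor’s product cards as a set. The site names and features below are hypothetical and are not the tire retailer’s data. The function lists the features your own design lacks, ordered by how many competitors use them.

```python
from collections import Counter

# Hypothetical audit: product-card features observed on each competitor site.
competitors = {
    "competitor_a": {"star rating", "price", "sale badge", "quick view"},
    "competitor_b": {"star rating", "price", "free shipping note"},
    "competitor_c": {"star rating", "price", "sale badge"},
}
our_site = {"price", "quick view"}


def feature_gaps(competitors: dict[str, set[str]], ours: set[str]) -> list[tuple[str, float]]:
    """Features competitors use that our site lacks, with the share of competitors using each."""
    counts = Counter(feature for features in competitors.values() for feature in features)
    gaps = [(feature, n / len(competitors)) for feature, n in counts.items() if feature not in ours]
    # Most common first; ties broken alphabetically so the output is stable.
    return sorted(gaps, key=lambda gap: (-gap[1], gap[0]))


for feature, share in feature_gaps(competitors, our_site):
    print(f"{feature}: {share:.0%} of competitors")
# star rating: 100% of competitors
# sale badge: 67% of competitors
# free shipping note: 33% of competitors
```

Features near the top of this list are the "missing common features" to consider for your variation. How common a feature is among competitors says nothing about whether it helps them convert, which is why the design preference survey comes next.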

A design preference survey is a survey in which users look over multiple designs for the same page or element. They are asked about their impression of the designs and what they preferred about each. The goal of this survey is to understand which features of the designs best meet the needs of users as they attempt to achieve a conversion goal.

For CRO testing, use a survey tool like UsabilityHub to create a design preference survey, comparing your site’s current element design with the variation you’ve created based on the competitive analysis. User assessments of both designs will provide clear indicators of UX design changes that should be prioritized.

In the example below, we were able to narrow our A/B testing approach for that same national tire retailer to focus on star ratings and cost presentation, deprioritizing lower-impact feature optimizations.

As with any survey, be sure to write questions that limit bias and provide participants with the space to give productive feedback. SurveyMonkey has a fantastic guide to designing surveys that can be used to steer your question writing process.

To generate highly impactful results, make sure to pair quantitative data collection with qualitative questions. After asking participants to rank the value of features, add a follow-up question that lets them tell you why they found particular features useful. This blending of quantitative and qualitative research is what makes the design survey a powerful tool for your A/B testing strategy.

User Interviews

User interviews are one of the more time-intensive user research methods you can pursue, but the benefits are often well worth the investment. The results of user interviews can generate a large number of ideas and insights that benefit your entire digital marketing team.

Effective user interviews for a CRO testing program are focused on answering questions about user needs when trying to complete a larger goal that your website fits into.

Instead of asking users questions that are highly specific to your website, you should ask questions about the circumstances in which they might use your website. Discovering information about their motivations, information needs, and external influencers can dramatically shape the way you think about new A/B testing ideas.

When designing user interview questions, consider how to draw detailed responses from your participants. Interview questions should be open-ended and exploratory. Because these questions often lead to tangents and follow-ups that can quickly eat into your session time, narrow your list to a few deep questions so that you aren’t cut short by your limited session length.

Another important consideration is the phrasing of questions. When you ask questions like, “How do you use our website?” you get answers like, “I use it mostly to book appointments.”

Rephrasing your questions to focus on specific past experiences will improve the quality of your responses. Asking someone to “Describe the last time you used our website” will result in longer, more detailed answers that open the floor for rich follow-up questions.

Recruiting participants is another vital aspect of conducting user interviews. To generate results that inform your A/B testing strategy, you need participants who closely match the users of your website. If your organization or client uses personas, recruit participants who match these detailed customer descriptions. If you do not have personas, demographic or market research can inform your selection of participants.

We recommend recording user interviews so that you can maintain a natural conversation instead of taking notes during the session. It is important to remain present and listen actively during interviews so that you are ready when opportunities for follow-up questions arise.

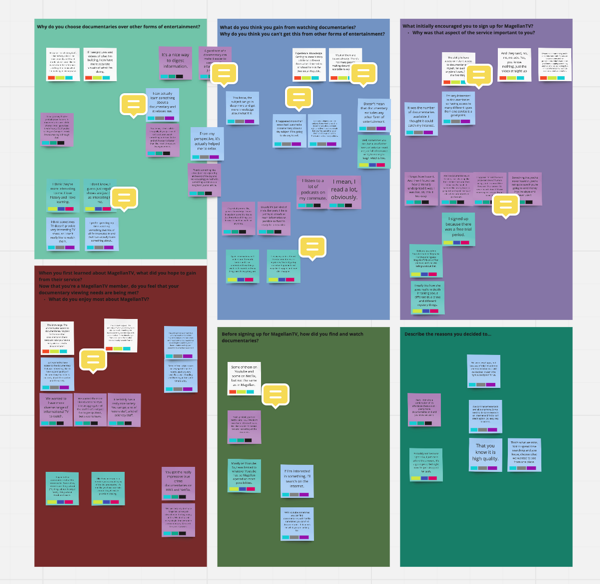

Session recordings can be analyzed later to identify patterns that can spark new A/B testing ideas. At Portent, we use a method called affinity mapping to uncover these patterns.

Affinity mapping is a process in which you record significant quotes from sessions on sticky notes (real or virtual) and begin to group them by common themes. This method allows you to quickly recognize overarching insights across participants and is great for analyzing user interview data collaboratively. Below is an example of the affinity map from user interviews for our documentary streaming client:

When you’ve finished analyzing your interviews, you will have a greater understanding of the context in which your website meets user needs. Try blending this qualitative research with a quantitative analytics assessment to validate your findings and craft highly impactful CRO testing ideas.
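Deciding which notes belong together is a judgment call the team makes, but once each quote carries an agreed theme, tallying the groups is mechanical. Here is a minimal Python sketch, assuming each sticky note has been transcribed with a participant ID and a theme label. The quotes and themes below are invented for illustration. It groups quotes by theme and ranks themes by how many distinct participants raised them, which we assume here as a rough proxy for which insights are overarching.

```python
from collections import defaultdict
from typing import NamedTuple


class Note(NamedTuple):
    participant: str
    quote: str
    theme: str  # label the team agreed on while sorting the sticky notes


def affinity_map(notes: list[Note]) -> list[tuple[str, list[Note]]]:
    """Group notes by theme, ranking themes by how many distinct participants raised them."""
    groups: dict[str, list[Note]] = defaultdict(list)
    for note in notes:
        groups[note.theme].append(note)
    return sorted(groups.items(),
                  key=lambda item: (-len({n.participant for n in item[1]}), item[0]))


# Invented example notes, for illustration only.
notes = [
    Note("P1", "I couldn't tell what the plan included.", "pricing clarity"),
    Note("P2", "The price was fine; I just wasn't sure what I'd get.", "pricing clarity"),
    Note("P3", "I used the search bar right away.", "search behavior"),
    Note("P1", "I always search instead of browsing.", "search behavior"),
    Note("P3", "Is there a monthly option?", "pricing clarity"),
]

for theme, group in affinity_map(notes):
    participants = sorted({n.participant for n in group})
    print(f"{theme}: {len(group)} notes from {', '.join(participants)}")
# pricing clarity: 3 notes from P1, P2, P3
# search behavior: 2 notes from P1, P3
```

Ranking by distinct participants rather than raw note count keeps one talkative participant from dominating a theme.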

Usability Testing

Of all the methods discussed in this post, the most effective for informing your A/B testing strategy is usability testing.

In a usability test, you get the chance to watch users complete an activity within your site and discuss the experience with them. Not only does this give you the opportunity to view what users do in context, but it also allows you to home in on why they do it.

Usability testing requires many of the same considerations as user interviews. Recruiting should focus on participants who closely mirror your target users. When designing your questions, budget your session time even more carefully: consider the length and complexity of the flow you are testing, and factor in additional time for user error.

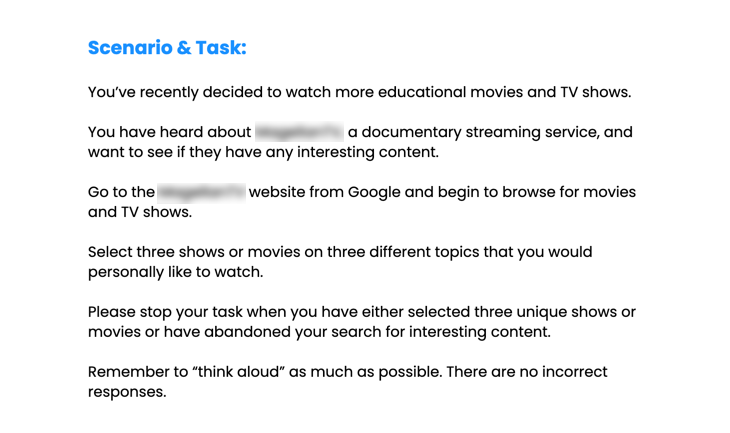

The major difference between usability testing and the other qualitative user research methods we’ve discussed is the task. In a usability test, you are asking a participant to complete one or more specific tasks within your site. Task design requires you to write out a scenario that prompts the participant to complete a set of actions. Your tasks should be simple so that participants can understand and remember them throughout the usability test. Tasks should be paired with a scenario that gets users in the proper mindset to complete the task. The example task below is from a usability test for our documentary streaming service client. It establishes context for the participant and provides clearly outlined instructions to complete a series of actions on the website.

Build time into each session for both pre- and post-task questions.

Pre-task questions are an opportunity to understand your participant’s background and experiences related to the website you’re testing. Ask about the last time they completed a similar task or their familiarity with the website you are testing. This will provide further context for your analysis of the usability test results.

In post-task questions, you are specifically asking participants about their experience completing the usability test. Common post-task questions that we ask include:

- Describe your overall experience with this flow.

- Was there anything that stood out to you?

- Was there anything that you found discouraging within the page/flow?

- Was the page/site organized the way that you expected it to be?

- Did you find anything unnecessary or distracting within this page/flow?

As with user interviews, recording sessions is highly recommended. At Portent, we use Zoom to record usability test sessions. The screen sharing feature of Zoom allows us to record a participant’s screen activity throughout the user test. This ensures that quotes from sessions are not taken out of context and that behaviors, as well as thought patterns, can be analyzed.

Affinity mapping is a great tool for analyzing the results of your usability tests. We recommend grouping quotes by page of the flow or by specific tasks first, then sub-grouping into additional themes.

When you’ve finished analyzing your usability test sessions, you will have collected a valuable set of insights into the user experience of your website. The problems participants faced in sessions can become the basis of your next A/B testing ideas, and the behavioral insights can often be applied to multiple pages, flows, and clients. These ideas are particularly valuable because they come directly from the source and are highly specific to your website’s user experience.

If usability testing sounds like too much of a time commitment, keep in mind that a usability test for conversion rate optimization can focus on a highly specific flow. For example, if a quantitative assessment reveals high shopping cart abandonment rates, you can focus solely on the experience of completing the checkout process instead of the entire flow of searching for, selecting, and completing a purchase. This can save you time and keep results within the scope of elements you can test.

The Wrap Up

It may seem like a daunting task, but for CRO testing, the scope of UX research is narrow and the methods are highly adaptable. User experience research for UX optimization is truly as simple as creating space to listen to users.

CRO is about incremental changes that improve the user experience of achieving a specific goal. The only way to improve the user experience is to understand it. While quantitative analysis is useful for informing your CRO testing strategy, blending quantitative and qualitative research is the best way to generate A/B testing ideas that truly meet user needs.

The methods can be as simple or as complex as your situation demands. The important thing to remember is that if you aren’t talking to your users, you aren’t tapping into the most vital resource your A/B testing program has.
