Just four weeks ago, our VP wrote about Where to Find “The Why” Behind Your Marketing Performance, a great look at how Portent balances providing meaningful results with sustaining a learning environment. Conversion rate optimization is no stranger to this challenge. Strategists in this industry must make a strong case for why a website should change while also considering how those changes will be made.

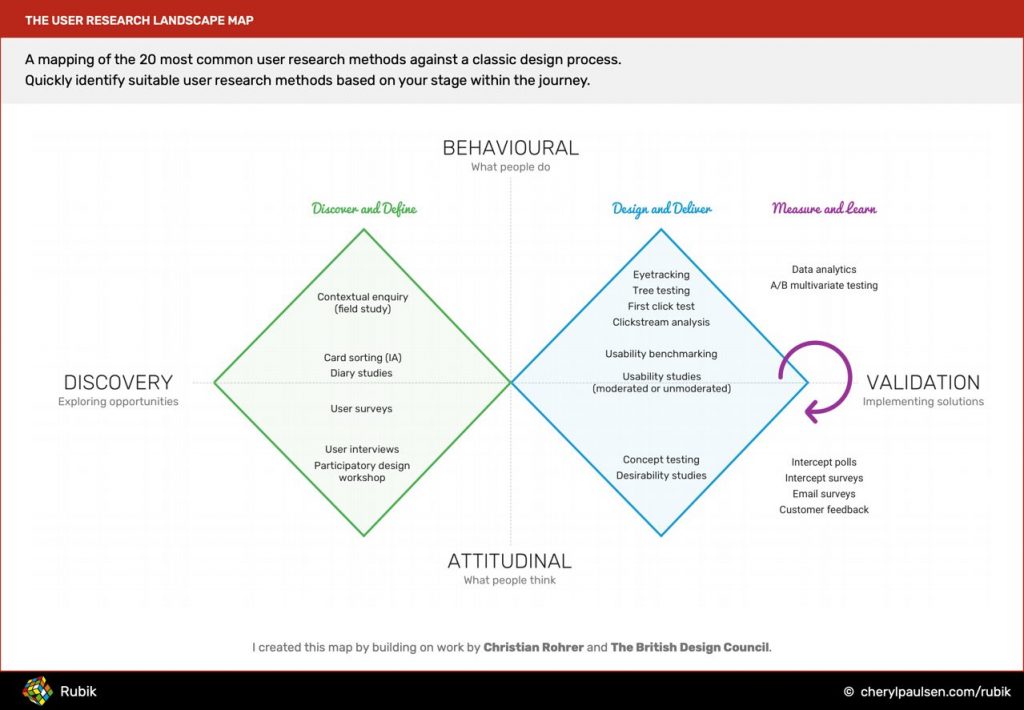

To address users’ needs and resources, CRO strategists tap into numerous methods of testing and analysis, including qualitative research methods most commonly used in user-centered design and user research. To see how and when these methods come into play, take a look at the image of the British Design Council’s double-diamond approach to user-centered design.

Here’s Something to Know About CRO Strategists

Contrary to popular belief, CRO strategists aren’t always considered UX designers or researchers, and that’s okay. It takes a delicate balance to split our time between the A/B testing we’re known for (the “Measure and Learn” phase of the right diamond) and gathering the insights that support our recommendations (the “Discover and Define” phase of the left diamond).

Our best work shines when we can provide all the evidence needed to optimize conversions and grow the business, and that evidence starts with your users and their personal experiences.

The Five Whys of Qualitative Analysis in CRO Strategy

To best explain the balance of user-centered and KPI-centered design in CRO strategy, I will walk you through one of my favorite UX research exercises, “The 5 Whys.”

1. Why Is Qualitative, User-Centered Design Research Essential in Conversion Rate Optimization?

Without user-centered design principles and user experience research, CRO strategists are left with minimal context and data from which to draw conclusions. Imagine a scenario where a CRO strategist is presented with only page performance data, numbers on a grid, and nothing else.

When CRO strategists look at the existing data from your analytics platforms, they’re looking for the points along a conversion path where they could make the biggest improvement in “increasing the percentage of website visitors who take a desired action.” Right away, they may form hypotheses and draw connections, but they’ll need quite a bit more information to back up potential recommendations. To get it, CRO strategists need to audit your web page to understand how it connects to your analytics.

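That audit of your web page includes a heuristic evaluation, a fancy yet purposely specific way of judging how practical and user-friendly something is, typically by reviewing the page against established usability principles such as Jakob Nielsen’s 10 usability heuristics.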

You may not have realized it, but a heuristic evaluation is itself a form of qualitative research in user-centered design and a gateway to homing in on analytics and testing strategy. Heuristic evaluations not only help inform A/B tests that provide quantifiable results but also inform user research.

When was the last time your user survey helped inform the layout of your website? When was the last time you heard firsthand about the issues users face? When did your sales and service teams last complain about answering the same question for every customer they engaged with? Should further qualitative user research be a priority for my business? If you’re racking your brain, let me set your mind at ease by answering the last question for you:

Yes. Absolutely. It’s all about the whole picture. CRO strategists don’t only want to know the outcomes of the Olympic gymnastics events; they’re also super interested in why the judges were or weren’t wowed by the performances.

Without further qualitative research to support A/B test and assessment findings, CRO strategists will find themselves stuck in a cycle of testing and relying solely on the insights numbers provide. It’s a cycle they want to avoid as much as possible; otherwise, they could end up doing more harm than good.

2. Why Does CRO Have a Lousy Reputation for Relying on Quantitative Results?

Lousy may be a strong word, but when the average person thinks of the tools behind successful conversion rate optimization, A/B testing is usually the first to come up.

You’ll see this in just about every article on the practice, in product and service descriptions, and even in the occasional job listing for strategists. The execution of A/B testing relies heavily on complex data, but if numbers alone dictate all design changes, CRO strategists can find themselves resorting to dark patterns. Because dark patterns trick users into taking an action, they become a natural byproduct of this method. CRO strategists need more time and opportunity to gather insights on user intent and perspective in order to earn users’ trust.

Maximilian Speicher’s article on KPI-centered design presents an exclusively numbers-driven, quantitative approach as the antithesis of excellent optimization.

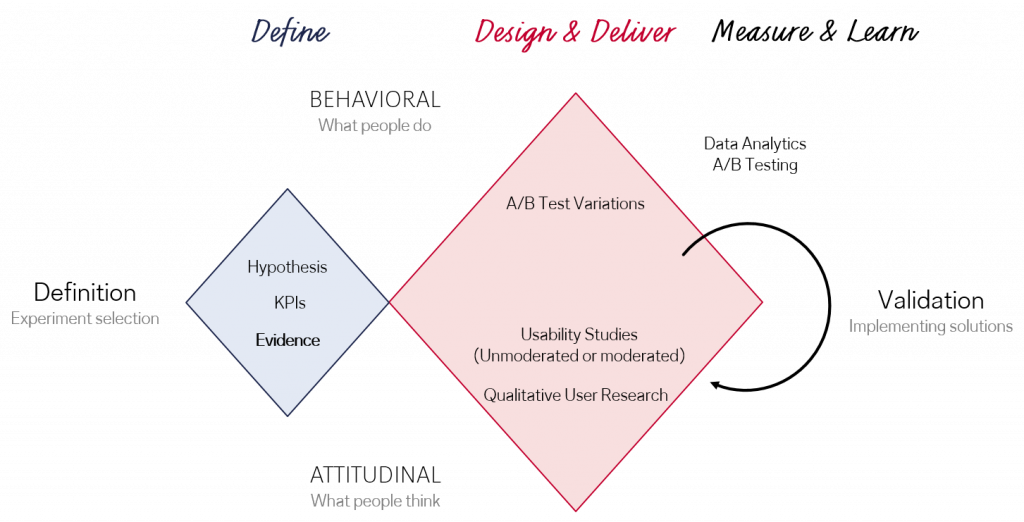

Speicher’s variation of the double diamond best illustrates how KPI-centered design leads to dark patterns and a harmful CRO reputation.

By bringing user-centered design principles into this heavily favored “Design & Deliver” double diamond, CRO specialists can produce more insightful results that benefit both business goals and user experience.

3. Why Should a CRO Strategist Incorporate User-Centered Design Into Their Strategy?

A balanced strategy is essential for delivering relevant, valuable results. It’s how a CRO strategist can curate evidence to further support their test outcomes.

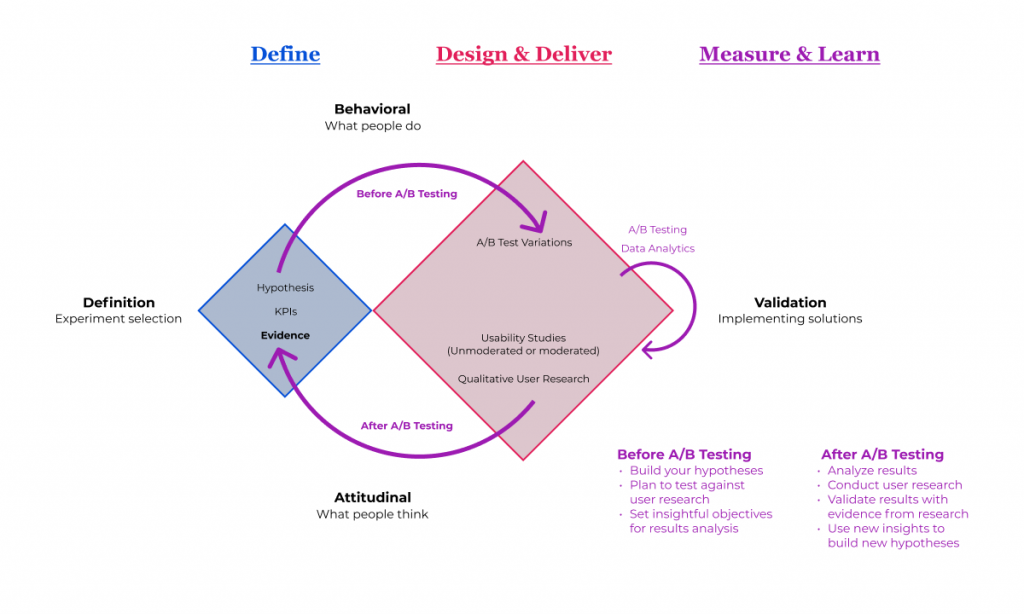

Speicher’s article on KPI-centered design also does a great job of explaining the use of qualitative user research as that evidence. I am convinced that this structure is essential for the future of CRO and digital marketing strategy.

Here, qualitative research gives both the CRO strategist and the business insight into users’ “attitudinal” side. Unlike quantitative data, which reveals user behaviors (how users took an action), attitudes reveal why: their motivations, their intent, and their trust in a business’s products and services.

One of the fundamental forces strategists value, brands earn, and users exclusively provide is trust. When conducted with care and patience, various user experience research methods generate a wealth of information and insights that reveal moments in which strategists can establish trust between a brand’s design and its audience.

Another precious insight revolves around user intent and motivation. When presented with only the data, CRO strategists have limited visibility into any group of users’ reasons for committing or abandoning. With user experience research, the reasons behind those interactions become clearer, and the optimization strategy gains actionable insights for testing.

The more practical insights CRO strategists gather from user research, the better informed their test results will be. The Nielsen Norman Group (NNG) describes user-centered design as using informative analysis to develop hypotheses and evaluative research to validate those hypotheses.

Once you’ve found the right balance of time and resources to conduct your qualitative research, your last step is to plan.

4. Why Does Planning When to Use User-Centered Design Research Matter?

Like the secret to great comedy, effective CRO strategy involves … timing.

Strict KPI-centered design relies upon metrics and hypotheses based on that data. As we’ve learned, there’s limited opportunity to fully validate test results when all strategists have are numbers, numbers, and more numbers. Let’s consider how we can plan effective, qualitative user research to set up tests and provide evidence for our findings.

Before A/B Testing

Ideas for landing page tests can’t always be drawn from the data we see or from historical context. We need hypotheses that are relevant to both business and user goals.

Conducting informative user research before tests begin reveals the who, what, and where of our testing roadmap and, more importantly, the why. CRO then tests these hypotheses, producing data that supports or disproves them and leads to further optimization.

Hark! More data! As strategists continue gathering insights, those insights need validation, which presents an opportunity to continue user research.

After A/B Testing

Not every test can “win” or prove a hypothesis, but CRO strategists will always gain new learnings that open the door to returning to users with more questions and a refreshed approach.

This stage is where validation research shines the most. Strategists can take learnings from their test results and support them further with research that uncovers the why.

Insights that remain unproven or unexplained become part of a roadmap of future tests. If you’re already asking yourself whether I’ve just drawn a cycle of testing, the answer is yes.

This cycle is one of the most harmonious in UX and product design, and conversion rate optimization thrives when it has the opportunity to use it. While CRO strategists can’t always count on the resources and flexibility their UX cousins enjoy, they’ll be grateful for any chance to share in that research space and avoid KPI-centered design’s pull toward dark patterns. They’ll strive to provide the best strategy for optimizing both the number and the quality of conversions.

5. Why Will Relying Only on KPI-Centered Design Hurt the Quality of Conversions in the Long Run?

The biggest problem with KPI-centered design is the notorious dark pattern. Just by looking at a list of common dark patterns, CRO specialists can recognize gaps in informative user research.

Take this example of “confirmshaming” from an insurance add-on offered during a concert ticket purchase.

The decline option, “No, don’t protect my $77.08 ticket purchase,” would have appealed more to users if it had been a simple “No, thank you” at the very least.

KPI-centered design techniques like this are tough on a user who is on the cusp of committing, or recommitting, to a product or service. If their confidence in a brand suffers at any stage of their engagement, their trust goes with it.

In the long run, this loss only makes it harder for a business or brand to earn its users’ trust and confidence.

Making Time for User-Centered Design Is Essential for CRO

When user-centered design is prioritized, planned, and incorporated into CRO strategy, the business learns not only how conversions are improving but, most importantly, why. Knowing when to add this approach to an existing testing plan will help balance design that improves KPIs with design that improves customer satisfaction.

CRO strategists should be aware of all the dangers KPI-centered design presents, which erode trust and harm conversions. However, with room to integrate user-centered design and support the hard-earned insights from A/B testing, they’ll find the right balance to satisfy both a business’s needs and its clientele.